Art Critic AI Agent

Building an autonomous AI pipeline for daily creative critique and content generation.

For 9 years straight, I’ve created a work of art every single day as part of my Everyday Project - a project rooted in discipline, experimentation, and an evolving creative process. It’s not just a habit; it’s a living archive of how ideas grow over time.

To take it even further, I built an autonomous AI agent that critiques each daily piece, turning every artwork into an experience - analyzing it, narrating the critique, and packaging it into shareable audio [Podcast] and video [YouTube/Instagram] content, all fully automated.

This is Everydays meets AI, reflection meets creation. A daily loop of making, critiquing, and sharing.

This is also a step forward in my exploration of automating parts of my life.

GitHub Repo

Workflow Architecture

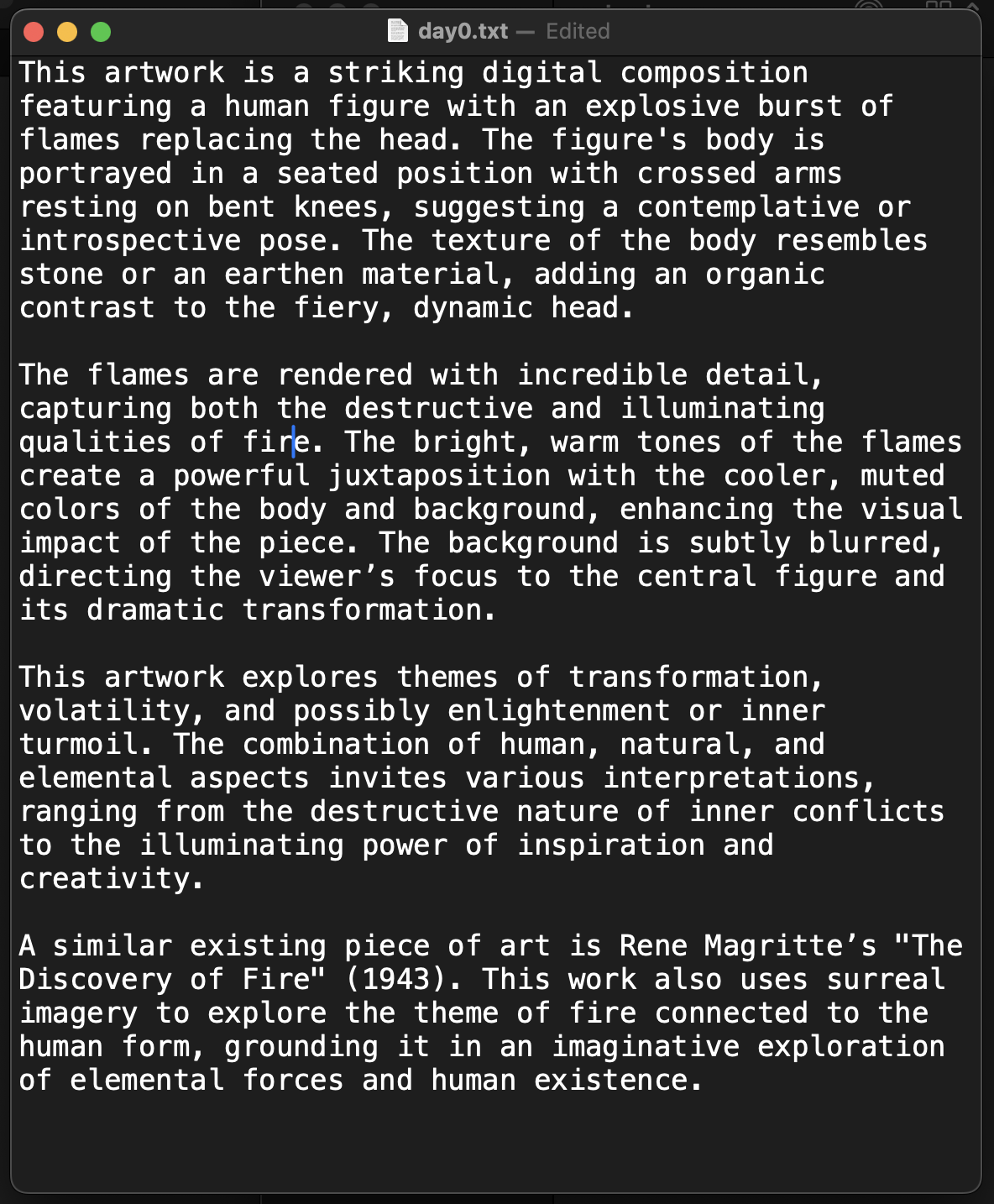

Workflow: the render image → text analyzing the image → text to audio [Podcasts] → combined video
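The flow in the diagram can be sketched as a small orchestrator that runs the steps in order. This is a minimal sketch under stated assumptions, not the code from the repo: `run_pipeline`, `DailyOutput`, and the three stage functions are hypothetical names. Each stage is passed in as a plain function, so a cloud backend (OpenAI) and a local one (Ollama, Piper) can be swapped without touching the orchestration.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable


@dataclass
class DailyOutput:
    """Artifacts produced for one daily render (hypothetical structure)."""
    image: Path
    critique: str
    audio: Path
    video: Path


def run_pipeline(
    image: Path,
    critique_fn: Callable[[Path], str],      # perception + reasoning: image -> critique text
    tts_fn: Callable[[str], Path],           # voice: critique text -> audio file
    video_fn: Callable[[Path, Path], Path],  # video synthesis: image + audio -> .mp4
) -> DailyOutput:
    """Run the workflow steps in order: image -> text -> audio -> video."""
    critique = critique_fn(image)   # Stage 1: analyze the render and write the critique
    audio = tts_fn(critique)        # Stage 1: narrate the critique
    video = video_fn(image, audio)  # Stage 2: combine image and narration
    return DailyOutput(image, critique, audio, video)
```

For a dry run, each stage can be a stub, e.g. `run_pipeline(Path("day.png"), lambda p: "critique", lambda t: Path("critique.wav"), lambda i, a: Path("day.mp4"))`, which exercises the ordering without calling any model or FFmpeg.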

Pipeline Breakdown

Stage 1 – Text & Audio Generation

- Input: Daily render image from my ongoing Everyday project.

- Perception: The agent analyzes the image using OpenAI’s GPT-4o Vision API. This was later replaced by local LLMs (Mistral, Llama3 via Ollama) for a free, offline workflow.

- Reasoning: Generates a written art critique based on the image content.

- Voice: Converts the critique text to audio narration. Initially used OpenAI TTS, later integrated free alternatives like Coqui TTS and Piper TTS.

- Bonus: Suggests an existing artwork related to the artwork being critiqued.
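The voice step can be sketched for two of the TTS backends named above. This is a minimal sketch, assuming the standalone `piper` executable from the rhasspy/piper project is on the PATH (it reads text on stdin and takes `--model` and `--output_file`; newer Piper packaging may use different flags) and, for OpenAI, the `/v1/audio/speech` endpoint, whose response body is the audio file itself. The function names, the `tts-1` model, and the `alloy` voice are illustrative assumptions, not necessarily what the project uses.

```python
import subprocess
from pathlib import Path


def piper_command(model: Path, out_wav: Path) -> list[str]:
    """Argument list for the Piper CLI; the text itself is passed on stdin."""
    return ["piper", "--model", str(model), "--output_file", str(out_wav)]


def synthesize_with_piper(text: str, model: Path, out_wav: Path) -> Path:
    """Narrate `text` into a WAV file with a local Piper voice model."""
    subprocess.run(
        piper_command(model, out_wav),
        input=text.encode("utf-8"),  # Piper reads the text to speak from stdin
        check=True,                  # raise CalledProcessError if piper fails
    )
    return out_wav


def openai_speech_payload(text: str, voice: str = "alloy") -> dict:
    """JSON body for POST https://api.openai.com/v1/audio/speech.

    The HTTP response body is the encoded audio (MP3 by default),
    so it can be written straight to disk.
    """
    return {"model": "tts-1", "input": text, "voice": voice}
```

Keeping the command construction separate from `subprocess.run` makes it easy to log or test the exact invocation before any audio is generated.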

Stage 2 – Video Synthesis

- Combines:

1. Daily render image (as static background)

2. Audio narration

- Uses FFmpeg (open-source) to produce the final .mp4 video.
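A common FFmpeg recipe for this step loops a still image for the duration of an audio track and encodes an H.264/AAC MP4. The sketch below builds such a command; it is an assumption about reasonable flags, not necessarily the exact invocation the project uses, and the function names are hypothetical.

```python
import subprocess
from pathlib import Path


def still_image_video_command(image: Path, audio: Path, out_mp4: Path) -> list[str]:
    """FFmpeg arguments that show one image for the length of the narration."""
    return [
        "ffmpeg",
        "-y",                        # overwrite the output file if it exists
        "-loop", "1",                # repeat the single image as a video stream
        "-i", str(image),
        "-i", str(audio),
        "-vf", "scale=trunc(iw/2)*2:trunc(ih/2)*2",  # libx264 with yuv420p needs even dimensions
        "-c:v", "libx264",
        "-tune", "stillimage",       # x264 tuning for static content
        "-pix_fmt", "yuv420p",       # broad player compatibility
        "-c:a", "aac",
        "-b:a", "192k",
        "-shortest",                 # stop when the shortest input (the audio) ends
        str(out_mp4),
    ]


def make_video(image: Path, audio: Path, out_mp4: Path) -> Path:
    """Run FFmpeg and return the path of the finished video."""
    subprocess.run(still_image_video_command(image, audio, out_mp4), check=True)
    return out_mp4
```

The `-shortest` flag matters here: because `-loop 1` makes the image stream endless, FFmpeg would otherwise never stop encoding.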

Prompt

“Describe and critique this artwork in detail. Also suggest an existing piece of art that is similar to this based on your analysis. Check and make sure that it is an existing artwork”
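The prompt is sent together with the image. A minimal sketch of building the two request bodies, assuming the render is a PNG read from disk and sent inline as base64: one for OpenAI’s Chat Completions endpoint (GPT-4o accepts an `image_url` content part holding a data URL) and one for Ollama’s `/api/generate` endpoint, which takes base64 strings in an `images` list. The helper names and the `llava` default are assumptions: Ollama’s `images` field needs a vision-capable model, and the original Mistral and Llama3 models are text-only.

```python
import base64
import json
import urllib.request
from pathlib import Path
from typing import Optional

PROMPT = (
    "Describe and critique this artwork in detail. Also suggest an existing "
    "piece of art that is similar to this based on your analysis. Check and "
    "make sure that it is an existing artwork"
)


def encode_image(path: Path) -> str:
    """Read an image file and return its bytes as a base64 string."""
    return base64.b64encode(path.read_bytes()).decode("ascii")


def openai_vision_payload(image_b64: str, model: str = "gpt-4o") -> dict:
    """Body for POST https://api.openai.com/v1/chat/completions with an inline PNG."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": PROMPT},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{image_b64}"},
                    },
                ],
            }
        ],
    }


def ollama_vision_payload(image_b64: str, model: str = "llava") -> dict:
    """Body for POST http://localhost:11434/api/generate (single JSON reply)."""
    return {"model": model, "prompt": PROMPT, "images": [image_b64], "stream": False}


def post_json(url: str, payload: dict, headers: Optional[dict] = None) -> dict:
    """POST a JSON body and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", **(headers or {})},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

For OpenAI, pass an `Authorization: Bearer <key>` header to `post_json` and read the critique from `choices[0].message.content`; for Ollama, post to the local endpoint and read the `response` field.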

Tools & Technologies

Takeaways
